
16 Best SEO Practices For Web Developers & Search Marketers

These practices are for web developers and search marketers who handle site enhancement features and want to avoid redoing work after development is finished.

Technical optimization matters most to web developers and search marketers. Here is a list of the top 16 on-page/on-site and technical SEO practices for web developers.

Every company looking to hire web developers should make sure they know basic SEO concepts, so that the site avoids technical errors and the crawl errors reported by Google and other search engines.

The web development team designs a project's architecture and business logic, while site promotion is handled by the SEO or digital marketing team. If developers and SEO experts don't work together, problems show up after deployment and pages have to be edited: meta tags, title attributes, ALT text, permalink structure, crawl errors, site structure, and so on.

What do search engines and web crawlers expect from web developers and designers, and what do we need to do?

As a web developer, you should focus on on-page search engine optimization (SEO) and technical SEO, both of which happen on the web page or website itself.

UI/UX, crawlability, site structure, indexation, canonicalization, hreflang, site speed, mobile-friendliness, HTML markup, HTTP status codes, broken links, sitemaps, robots.txt, meta title and description length, and the rendering phase of a website are the most important SEO concepts in the web application development process. By our estimate, getting them right covers about 60% of a website's SEO, and it also helps improve conversions.

These practices help optimize a site for better visibility in Google search results. Much of the work is day-to-day problem solving, and as a web developer you should take responsibility for the website.

If pages are slow to render, you have to find and fix the cause. Many of these issues come from a lack of SEO knowledge among web developers and designers.

List of The 16 Best SEO Practices for Web Developers & Search Marketers


1. Good UI/UX (User Interface/User Experience) with Beautiful Appearances

Search marketers say content is 'king' when it comes to search engine optimization (SEO). But UI/UX (user interface/user experience) is an integral part of presenting content on a website: graphics and a good UI/UX make your website more attractive.

A well-designed UI and UX creates a good first impression and, with a bit of creative effort, boosts conversions. If you are optimizing for end users, you need both good content and a good user interface/user experience: together they turn visitors into goals and conversions.

UI and UX are crucial for search engine optimization (SEO), so every developer should aim for a good UI/UX.

2. Mobile Responsive and Optimization

User-friendly, mobile-responsive websites improve the user experience (UX) and make it easy for users to find what they want.

Visitors want a website that's quick to navigate and access. Mobile optimization means making the website responsive and mobile-friendly.

A responsive, mobile-friendly site attracts and keeps more mobile users, and a well-structured page layout that looks good on every screen can improve the CTR.

Worldwide, 52.2% of all web traffic was generated through mobile phones at the time of writing. This is why we need to optimize our websites for mobile phones. Every developer should choose a good UI/UX with a responsive, mobile-friendly design for better SEO.

3. Page Speed or Site Speed for SEO

Site and page speed also matter for Google and other search engines: page speed is one of Google's ranking factors. Google also offers a speed test tool focused on mobile.

Google's PageSpeed Insights tool analyzes a site's speed and recommends improvements. Is your web page mobile-friendly? You can also run Google's Mobile-Friendly Test on your website.

Google's AMP (Accelerated Mobile Pages) can improve page speed, especially on mobile devices. AMP itself is not a ranking factor, but site/page speed is.

Faster-loading web pages reduce bounce rates and improve mobile SEO rankings. Every developer should work on improving site speed for better SEO.

Google also launched web.dev, a portal that helps web developers build modern web applications with best practices. It measures your site and shows detailed guidance for improving it.

web.dev helps web developers like you learn and apply the web's new capabilities to your own websites and applications.

4. HTML Markup Validation

For those who are unfamiliar, W3C stands for the World Wide Web Consortium, the organization that develops standards for code on the web. Markup validation means checking a page's HTML to confirm that every page of the website is built according to those web standards. Many developers are unfamiliar with W3C standards.

W3C compliance is NOT a ranking factor for Google, but validation catches broken or misused tags, and proper tags around the content support a good UI/UX.

The SEO industry also relies on attributes such as the image alt and title attributes. Under the HTML standard, an image (img) element without an alt attribute is generally not valid markup; the title attribute is optional.

If a page's markup is badly broken, it's even possible that Google won't be able to parse and index it properly. Every developer should follow W3C web standards when designing and developing, for better SEO.
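A full standards check is best done with the W3C Markup Validation Service. As a minimal sketch of just one check mentioned above, the following Python uses the standard-library html.parser to list img elements that have no alt attribute. The names MissingAltFinder and images_missing_alt are hypothetical, and this is not a validator.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Collects the src of every <img> element that has no alt attribute."""

    def __init__(self) -> None:
        super().__init__()
        self.missing: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        # alt="" (a decorative image) counts as present; only a missing alt is flagged.
        if "alt" not in attributes:
            # Record the src (or a placeholder) so the image can be found later.
            self.missing.append(attributes.get("src") or "<no src>")


def images_missing_alt(html: str) -> list[str]:
    finder = MissingAltFinder()
    finder.feed(html)
    finder.close()
    return finder.missing


page = '<p><img src="logo.png" alt="Company logo"><img src="chart.png"></p>'
print(images_missing_alt(page))  # ['chart.png']
```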

5. Titles, Meta Description, Image alt tag, and title tag optimization

Metadata is one of the most important parts of on-page SEO. It gives each web page its identity and shapes how the page appears in the SERPs: the title tag usually becomes the clickable headline, and the meta description often becomes the snippet. Titles that contain relevant keywords help pages rank and help users recognize them.

Title and Meta Description Length:

The length of the title tag (page title) matters for SEO: depending on the device, Google may display about 60-65 characters, so it is a good idea to keep the key information within that range.

Search snippets have now reverted to their older, shorter length of about 150-155 characters. In December 2017 Google raised the limit to as many as 320 characters, but in May 2018 it shortened snippets again to roughly 150-155 characters. The Yoast SEO snippet editor also uses a 155-character limit for meta descriptions.

Google's Danny Sullivan confirmed that Google had indeed changed the snippet length:

Our search snippets are now shorter on average than in recent weeks, though slightly longer than before a change we made last December. There is no fixed length for snippets. Length varies based on what our systems deem to be most useful.

— Danny Sullivan (@dannysullivan) May 14, 2018

Read More: https://moz.com/blog/how-to-write-meta-descriptions-in-a-changing-world

Because Google's display length varies by device, keep the key information of a meta description within the first 150-155 characters. Every developer should keep titles and descriptions within these lengths for better SEO.
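As a rough sketch, the length guidance above can be turned into an automated check. The limits below (60 characters for titles, the low end of the 60-65 range, and 155 for descriptions) are assumptions taken from this section; Google truncates by pixel width rather than a fixed character count, so treat the result as a warning, not a rule. check_snippet_lengths is a hypothetical helper.

```python
# Approximate display limits from the guidance above (assumptions, not Google rules).
TITLE_MAX_CHARS = 60
DESCRIPTION_MAX_CHARS = 155


def check_snippet_lengths(title: str, description: str) -> list[str]:
    """Return warnings for a title or meta description likely to be truncated."""
    warnings = []
    if len(title) > TITLE_MAX_CHARS:
        warnings.append(f"title is {len(title)} chars (> {TITLE_MAX_CHARS})")
    if len(description) > DESCRIPTION_MAX_CHARS:
        warnings.append(
            f"description is {len(description)} chars (> {DESCRIPTION_MAX_CHARS})"
        )
    if not description.strip():
        warnings.append("description is empty")
    return warnings


print(check_snippet_lengths("16 Best SEO Practices For Web Developers", "x" * 200))
# ['description is 200 chars (> 155)']
```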

H1, H2, H3 heading tags:

The H1 heading tells users and search engines what the most important content of a web page is. That makes it one of the most important parts of on-page SEO, and it helps the page rank better in Google search results.

H1-H6 heading tags play a crucial role for both search engines and web users. They give the page a clear outline of headings, which improves its layout and UX.
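To review a page's heading structure, a minimal sketch using Python's html.parser can list the h1-h6 outline and flag two common issues: a missing or repeated h1 and skipped levels (such as h2 followed by h4). Expecting exactly one h1 is a common convention, not a Google requirement; the names HeadingOutline and outline_problems are hypothetical.

```python
from html.parser import HTMLParser
from typing import Optional

HEADING_TAGS = {"h1", "h2", "h3", "h4", "h5", "h6"}


class HeadingOutline(HTMLParser):
    """Records (level, text) for every h1-h6 element, in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.headings: list[tuple[int, str]] = []
        self._level: Optional[int] = None
        self._text: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in HEADING_TAGS:
            self._level = int(tag[1])
            self._text = []

    def handle_data(self, data):
        if self._level is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag in HEADING_TAGS and self._level is not None:
            self.headings.append((self._level, "".join(self._text).strip()))
            self._level = None


def outline_problems(html: str) -> list[str]:
    """Flag a missing or duplicate h1 and skipped heading levels."""
    parser = HeadingOutline()
    parser.feed(html)
    parser.close()
    levels = [level for level, _ in parser.headings]
    problems = []
    if levels.count(1) != 1:
        problems.append(f"expected one h1, found {levels.count(1)}")
    # A jump of more than one level (h2 -> h4) skips part of the outline.
    for prev, cur in zip(levels, levels[1:]):
        if cur > prev + 1:
            problems.append(f"heading level jumps from h{prev} to h{cur}")
    return problems


print(outline_problems("<h1>SEO</h1><h2>Speed</h2><h4>Caching</h4>"))
# ['heading level jumps from h2 to h4']
```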

Duplicate Content and Metadata:

Duplicate content is content that appears in more than one place on the Internet: on several pages of one site or across domains. When search engines crawl identical or very similar content at multiple URLs, it can cause a number of SEO problems, such as the wrong URL appearing in results or ranking signals being split between the copies.

If your website contains multiple pages with largely identical content, there are several ways to tell Google which URL you prefer, the most common being a canonical URL. Indicating the preferred URL this way, to avoid duplicate content, is called canonicalization.

Meta descriptions help increase the click-through rate (CTR) on search engine results pages (SERPs). They don't influence rankings directly; a relevant meta description influences CTR, which is still very important.

Google announced in September 2009 that neither meta descriptions nor meta keywords are ranking factors for Google's search results.

Search engines often use the meta description as the search snippet, but there is no guarantee that Google will use a page's meta description this way.

Google can also adjust the snippet based on the user's query. The meta description is not a ranking factor, but a good one helps increase clicks in the SERPs.

Image SEO: alt tag, and title tag optimization

Images play a major role on a website and improve its UX (user experience). They are also an important part of content marketing.

Choose images related to the topic. With the alt and title attributes, we can then optimize them for Google: descriptive alt text that includes relevant, concept-related keyword phrases helps Google understand what an image shows, which helps it rank better in Google search results.

Structural links for crawling (internal & external links):

Internal linking is a good habit for better rankings. Links to related pages reduce the bounce rate and keep users on the website longer, which is good for rankings.

If another page on your site covers a topic related to the keyword on the current page, link to it. With consistent internal linking, visitors stay longer on the website, and it boosts your SEO.

Linking to authoritative external websites where it helps readers is also considered a good on-page practice.

Many SEO practitioners believe that pages with relevant external links rank better in Google search results than pages without them, and treat outbound links as one of Google's ranking factors.
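When auditing links, the first step is usually telling internal links from external ones. This minimal sketch resolves each href against the page URL with urllib.parse.urljoin and compares hostnames; it assumes that only an exact host match counts as internal, so www.example.com and example.com would be treated as different sites. classify_links is a hypothetical name.

```python
from urllib.parse import urljoin, urlparse


def classify_links(page_url: str, hrefs: list[str]) -> dict[str, list[str]]:
    """Split hrefs found on page_url into internal and external absolute URLs."""
    site_host = urlparse(page_url).netloc.lower()
    result: dict[str, list[str]] = {"internal": [], "external": []}
    for href in hrefs:
        absolute = urljoin(page_url, href)  # resolves relative links like "/about"
        parsed = urlparse(absolute)
        if parsed.scheme not in ("http", "https"):
            continue  # skip mailto:, tel:, javascript: and similar links
        kind = "internal" if parsed.netloc.lower() == site_host else "external"
        result[kind].append(absolute)
    return result


links = classify_links(
    "https://www.example.com/blog/seo/",
    ["/about", "guide/", "https://developers.google.com/search", "mailto:hi@example.com"],
)
print(links["internal"])  # ['https://www.example.com/about', 'https://www.example.com/blog/seo/guide/']
print(links["external"])  # ['https://developers.google.com/search']
```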

6. Structure data by schema.org (Microdata, RDFa, or JSON-LD)

Structured data and schema markup are becoming more important for search engine optimization (SEO). Structured data helps search engines understand a page's content and makes the page eligible for enhanced results in Google Search.

Microdata, RDFa, and JSON-LD are the three structured data formats, and JSON-LD is the one most recommended for SEO; Google also recommends the JSON-LD format. Structured data shapes how a page appears in Google's search results.

These enhanced results include the Knowledge Graph, rich snippets, rich cards, featured snippets, the Top Stories carousel, rich reviews, video snippets, news and topical articles, the sitelinks search box, and more.

Structured data is a powerful way to earn rich search snippets, and those snippets help increase clicks and conversions.
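As an illustration of the JSON-LD format Google recommends, the following sketch builds a schema.org Article object with Python's json module and wraps it in a script tag of type application/ld+json. The property set (headline, author, datePublished, image) and the function name article_json_ld are minimal assumptions; check Google's documentation for the properties a given rich result needs, and validate the output with the Rich Results Test.

```python
import json


def article_json_ld(headline: str, author: str, date_published: str, image_url: str) -> str:
    """Build a schema.org Article object wrapped in a JSON-LD script tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date, e.g. "2021-05-01"
        "image": [image_url],
    }
    # Escaping "<" as \u003c stays valid JSON and stops a "</script>" inside the
    # data from closing the tag early.
    body = json.dumps(data, indent=2).replace("<", "\\u003c")
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Hypothetical page values for illustration.
print(article_json_ld("16 Best SEO Practices", "Jane Doe", "2021-05-01",
                      "https://www.example.com/cover.jpg"))
```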

7. Robots.txt File vs Robots meta tag vs X-Robots-Tag HTTP header

The robots.txt file, the robots meta tag, and the X-Robots-Tag HTTP header are used to control, or communicate, what search engine spiders (bots) do on your website or web application.

They manage how the site is crawled (spidered) and indexed, so that search engines spend their effort on the pages you want to rank.

Robots.txt controls which URLs crawlers may fetch, while the robots meta tag and the X-Robots-Tag control whether a page may be indexed. Note that a URL blocked in robots.txt can still be indexed, without its content, if other pages link to it.

Robots.txt is a standard text file, placed at the root of the site (for example, https://www.example.com/robots.txt), that tells web crawlers (bots) which parts of the website or web application they may crawl.
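Python's standard library includes urllib.robotparser, which applies robots.txt rules the way a simple crawler would. This sketch parses a hypothetical robots.txt from a string (in practice you would call set_url() and read() to fetch the live file) and reports which paths Googlebot may not fetch. blocked_paths is a hypothetical helper, and this parser uses plain prefix matching, so it does not implement wildcard patterns such as /*.pdf$.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt used for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart

Sitemap: https://www.example.com/sitemap.xml
"""


def blocked_paths(robots_txt: str, paths: list[str], agent: str = "Googlebot",
                  base: str = "https://www.example.com") -> list[str]:
    """Return the paths that the given user agent is not allowed to crawl."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [path for path in paths if not parser.can_fetch(agent, base + path)]


print(blocked_paths(ROBOTS_TXT, ["/", "/blog/seo-tips/", "/admin/login", "/cart?item=1"]))
# ['/admin/login', '/cart?item=1']
```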

The robots meta tag controls the indexing of a single web page. It is a meta element placed in the head section of the HTML document. It tells search engine crawlers which pages to keep out of the index (noindex) and which to index, and whether to follow the links in the page's content (follow or nofollow).

If you don't have a robots meta tag on your site, don't panic. The default is "index, follow": search engine crawlers will index your pages and follow their links.

The X-Robots-Tag HTTP header is an alternative to the robots meta tag. It accepts the same directives, but sends them in the HTTP response instead of the HTML.

Because it is part of the HTTP header response for a given URL, the X-Robots-Tag can control the indexing of a single page, a file, or a whole website.

The robots meta tag cannot control non-HTML files such as Adobe PDF files, Flash, images, video and audio files, and other types. With the X-Robots-Tag, we can easily control these non-HTML files.

In PHP, the header() function sends a raw HTTP header. To prevent search engines from indexing the pages you generate with PHP and from following the links on them, you could add the following at the top of the header.php file, before any output is sent:

header("X-Robots-Tag: noindex, nofollow", true);

You can also set the X-Robots-Tag in the Apache or Nginx server configuration files, or in a .htaccess file.

On Apache servers (with mod_headers enabled), add the following lines to the server configuration file or a .htaccess file:

<FilesMatch "\.(doc|pdf)$">
  Header set X-Robots-Tag "noindex, noarchive, nosnippet"
</FilesMatch>

On Nginx servers, add the following lines to the server configuration file:

location ~* \.(doc|pdf)$ {
    add_header X-Robots-Tag "noindex, noarchive, nosnippet";
}
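Because the robots meta tag and the X-Robots-Tag header use the same directives, one check can cover both. This minimal sketch treats a page as indexable unless either source contains noindex or none (shorthand for noindex, nofollow), matching the "index, follow" default. It is a simplification: it ignores user-agent-specific values such as googlebot: noindex, and the names robots_directives and is_indexable are hypothetical.

```python
from typing import Optional


def robots_directives(value: Optional[str]) -> set[str]:
    """Split a robots meta content or X-Robots-Tag value into lowercase directives."""
    if not value:
        return set()
    return {part.strip().lower() for part in value.split(",") if part.strip()}


def is_indexable(meta_robots: Optional[str] = None,
                 x_robots_tag: Optional[str] = None) -> bool:
    """No tag and no header means the default "index, follow"."""
    directives = robots_directives(meta_robots) | robots_directives(x_robots_tag)
    # "none" is shorthand for "noindex, nofollow".
    return not ({"noindex", "none"} & directives)


print(is_indexable())                                                # True
print(is_indexable(x_robots_tag="noindex, noarchive, nosnippet"))  # False
```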

8. XML Sitemaps

An XML sitemap is a powerful tool that helps Google index your web pages, and it is part of an SEO-friendly website. Submitting your XML sitemap to Google Search Console is good for SEO.

Google needs to crawl every relevant page of your site, but some important pages can be hard for it to find. This is why we need XML sitemaps: they make it easier for Google to crawl and index those pages so they can appear in search results. Every website should have an XML sitemap.

A sitemap does not rank pages by itself, since Google decides rankings; it makes pages easier to index, and indexed pages can then earn rankings in Google search results.

Sitemaps help web crawlers find and index web pages, and they notify Google and other search engines about the pages to read and index. This can help your pages appear in Google's SERPs.

There are different types of sitemaps:

- HTML Sitemap

- XML Sitemap

- Image Sitemap

- Video sitemap

- News Sitemap

- Mobile-Sitemap

Sitemaps help bots discover all the page URLs, images, documents, videos, and news content on a website, which improves SEO.
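An XML sitemap is simple enough to generate with Python's xml.etree.ElementTree. In this sketch, build_sitemap is a hypothetical helper that takes (URL, last-modified date) pairs; ElementTree escapes characters such as & in URLs, which the sitemap protocol requires.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Build a sitemap from (url, lastmod) pairs; lastmod uses the W3C date format."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap([
    ("https://www.example.com/", "2021-05-01"),
    ("https://www.example.com/blog/seo-tips/", "2021-04-20"),
]))
```

A single sitemap file is limited to 50,000 URLs and 50 MB uncompressed, so larger sites split their URLs across several sitemaps listed in a sitemap index.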

9. Canonical tags and Hreflang tags

Canonical URLs (the rel=canonical attribute) play a major role in avoiding duplicate content. With the rel=canonical tag, bots can easily tell which page is the original and which pages on the website are duplicates.

Canonicalization is the process of avoiding duplicate content on website pages. A canonical tag tells search engines that a specific URL represents the original copy of a page. If the website has similar or duplicate pages, consolidate them by pointing the duplicate URLs at the preferred one with a canonical link element.

Search engines also support the rel="canonical" link element across domains (for example, between the primary domain, a subdomain, and other domains on the server). When duplicate content appears on cross-domain URLs, the tag helps avoid cross-domain content duplication.
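A canonical URL is usually a normalized form of the URLs that duplicate it. This minimal sketch lowercases the hostname, drops the fragment, and removes the query parameters listed in TRACKING_PARAMS before emitting a rel="canonical" link element. The parameter list and the function names are hypothetical; which parameters are safe to drop depends on your site.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that never change the content of a page on this site.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "gclid", "fbclid"}


def canonical_url(url: str) -> str:
    """Lowercase the host, drop tracking params and the fragment, keep the rest."""
    parts = urlsplit(url)
    query = [(key, value) for key, value in parse_qsl(parts.query, keep_blank_values=True)
             if key not in TRACKING_PARAMS]
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path or "/",
                       urlencode(query), ""))


def canonical_link_tag(url: str) -> str:
    # escape() turns "&" in the query string into "&amp;" inside the attribute.
    return f'<link rel="canonical" href="{escape(canonical_url(url))}" />'


print(canonical_link_tag("https://WWW.Example.com/shoes?utm_source=news&color=red#reviews"))
# <link rel="canonical" href="https://www.example.com/shoes?color=red" />
```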

Hreflang tags play a similar role for content in different languages: they help avoid duplicate-content problems between language or regional versions of a page. Hreflang tags tell search engines such as Google and Yandex which language a page's content is written in and which users it targets, so the search engine can serve the right version to the right user. This lets you tell Google about the localized versions of your web pages and optimize the content for international users.

Some search engines, such as Bing, do not support hreflang annotations (rel="alternate" hreflang="x").

The following four methods can help these search engines figure out which language a page targets:

1. HTML meta element or <link> tags

<meta http-equiv="content-language" content="en-us" />
<link rel="alternate" href="https://www.example.com/" hreflang="en-US" />

2. HTTP response headers

HTTP/1.1 200 OK
Content-Language: en-us

3. <html> tag language attribute

<html lang="en-us">
…
</html>

4. Represented in the XML sitemap

<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9" xmlns:xhtml="http://www.w3.org/1999/xhtml">
  <url>
    <loc>https://www.example.com/</loc>
    <xhtml:link rel="alternate" hreflang="en" href="https://www.example.com/"/>
    <xhtml:link rel="alternate" hreflang="de" href="https://www.example.com/de"/>
    <xhtml:link rel="alternate" hreflang="fr" href="https://www.example.com/fr"/>
  </url>
  <url>
    <loc>https://www.example.com/de</loc>
    <xhtml:link rel="alternate" hreflang="en" href="https://www.example.com/"/>
    <xhtml:link rel="alternate" hreflang="de" href="https://www.example.com/de"/>
    <xhtml:link rel="alternate" hreflang="fr" href="https://www.example.com/fr"/>
  </url>
  <url>
    <loc>https://www.example.com/fr</loc>
    <xhtml:link rel="alternate" hreflang="en" href="https://www.example.com/"/>
    <xhtml:link rel="alternate" hreflang="de" href="https://www.example.com/de"/>
    <xhtml:link rel="alternate" hreflang="fr" href="https://www.example.com/fr"/>
  </url>
</urlset>
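Hreflang annotations must be reciprocal: every language version lists all versions, including itself. As a sketch of that rule for the HTML link method, the following Python builds one identical block of link elements to place in the head of every version, with an optional x-default for users whose language isn't listed. The URLs and the hreflang_links name are illustrative.

```python
from html import escape
from typing import Optional


def hreflang_links(alternates: dict[str, str], x_default: Optional[str] = None) -> str:
    """Return the <link> tags that every language version of a page should carry."""
    lines = [
        f'<link rel="alternate" hreflang="{escape(lang)}" href="{escape(url)}" />'
        for lang, url in sorted(alternates.items())
    ]
    if x_default:
        lines.append(
            f'<link rel="alternate" hreflang="x-default" href="{escape(x_default)}" />'
        )
    return "\n".join(lines)


versions = {
    "en": "https://www.example.com/",
    "de": "https://www.example.com/de",
    "fr": "https://www.example.com/fr",
}
print(hreflang_links(versions, x_default="https://www.example.com/"))
```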

10. Open Graph and Twitter Cards

Open Graph and Twitter Cards are social meta tags. They control how a page's snippet looks when it is shared on social media sites.

Social meta tags contain the title, meta description, featured image, categories, tags, site name, URL, language, author name, etc. These tags improve the UI/UX of your pages and posts on social media sites.

Open Graph and Twitter Card meta tags tell social media networks how to show rich snippets for your pages.

Open Graph Meta Tags:

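Open Graph tags are meta elements placed in the head of the HTML page. They use the property attribute, for example og:title, og:description, og:image, og:url, and og:type.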

Twitter Cards:

Twitter Cards work much like Open Graph meta tags: they control how a post is displayed on Twitter. Twitter offers two types of cards that are commonly implemented on websites:

Summary Cards: Title, description, thumbnail, and Twitter account attribution.

Summary Cards with Large Image: Similar to a Summary Card, but with a prominently featured image.

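To generate these cards for your site, output a twitter:card meta tag (with the value summary or summary_large_image) along with twitter:title, twitter:description, and twitter:image tags in the head of your web page.

Open Graph meta tags and Twitter Cards make shared links more attractive, which can improve social signals and, indirectly, SEO.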
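As a sketch of the tags described in this section, this Python function emits Open Graph tags (using the property attribute) and Twitter Card tags (using the name attribute) for one page, HTML-escaping each value. The function name social_meta_tags and the choice of og:type "article" are assumptions for illustration.

```python
from html import escape


def social_meta_tags(title: str, description: str, image_url: str,
                     page_url: str, large_image: bool = True) -> str:
    """Return Open Graph and Twitter Card <meta> tags for a page's head."""
    open_graph = {
        "og:type": "article",
        "og:title": title,
        "og:description": description,
        "og:image": image_url,
        "og:url": page_url,
    }
    twitter = {
        "twitter:card": "summary_large_image" if large_image else "summary",
        "twitter:title": title,
        "twitter:description": description,
        "twitter:image": image_url,
    }
    # Open Graph tags use the "property" attribute; Twitter Card tags use "name".
    tags = [f'<meta property="{k}" content="{escape(v)}" />' for k, v in open_graph.items()]
    tags += [f'<meta name="{k}" content="{escape(v)}" />' for k, v in twitter.items()]
    return "\n".join(tags)


print(social_meta_tags("16 Best SEO Practices", "Technical SEO tips for developers.",
                       "https://www.example.com/cover.jpg",
                       "https://www.example.com/seo-best-practices/"))
```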

11. URL Structure or Permalink Structure

In search engine optimization, URL optimization is one of the important factors: SEO-friendly URLs are a key part of making your website SEO-friendly.

URL stands for Uniform Resource Locator (also called a web address); it specifies the location of a web page. URLs should be readable by humans as well as search engines.

Every page has a unique URL. A URL has two main parts: the domain name (hostname) and the slug, the page-specific part of the path. A friendly URL contains keywords that users and search engines can easily understand, and such URLs are called SEO-friendly URLs. For example, https://www.example.com/seo-best-practices/ says more than https://www.example.com/?p=123.

Permalinks are the URLs of web pages or posts. Every article has a different URL, and permalinks are meant to be permanent and valid for a long time. The permalink structure is the basic format those URLs follow.

WordPress can do all of this for you automatically. It regenerates (flushes) its rewrite rules whenever you save the permalink settings, so when your code changes how URLs are built, simply re-save your permalinks. You can update the permalink structure in the WordPress Dashboard » Settings » Permalinks.

Keeping URLs as simple, relevant, and accurate as possible makes them easy for both users and search engines to understand.
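A short, readable slug can be generated from a page title. This minimal sketch, with a hypothetical slugify function, strips accents with unicodedata, lowercases the text, and joins the remaining ASCII words with hyphens. It drops characters from non-Latin scripts entirely, so sites in those languages need a different approach.

```python
import re
import unicodedata


def slugify(title: str) -> str:
    """Turn a page title into a lowercase, hyphen-separated URL slug."""
    # Decompose accented characters, then drop the non-ASCII marks (é -> e).
    ascii_title = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Keep runs of letters and digits; everything else becomes a separator.
    words = re.findall(r"[a-z0-9]+", ascii_title.lower())
    return "-".join(words)


print(slugify("16 Best SEO Practices For Web Developers & Search Marketers"))
# 16-best-seo-practices-for-web-developers-search-marketers
```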

12. HTTP response status codes (301/302/404)

HTTP defines a set of request methods that indicate the action to be performed on a resource. HTTP status codes are part of the server's response to a client (browser) request: they tell the client how the request was handled.

When we enter a URL in the browser's address bar, the client (browser) sends an HTTP request to the server, and the server sends a response back to the client.

The server's response contains status information (such as 200 OK) and headers describing the data sent back, such as its type, date, and size.

HTTP responses are divided into five classes: informational responses (1xx), successful responses (2xx), redirects (3xx), client errors (4xx), and server errors (5xx).

Different Types of HTTP Response Status Codes (HTTP Status Codes):

1xx: Informational – Request received, continuing process.

2xx: Success – The action was successfully received, understood, and accepted.

3xx: Redirection – Further action must be taken in order to complete the request.

4xx: Client Error – The request contains bad syntax or cannot be fulfilled.

5xx: Server Error – The server failed to fulfill an apparently valid request.
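Python's http.HTTPStatus enum knows the standard reason phrase for each code, which makes the five classes above easy to show in code. describe_status is a hypothetical helper that uses the first digit of the code to pick the class.

```python
from http import HTTPStatus

STATUS_CLASSES = {
    1: "Informational",
    2: "Success",
    3: "Redirection",
    4: "Client Error",
    5: "Server Error",
}


def describe_status(code: int) -> str:
    """Return e.g. '301 Moved Permanently (Redirection)'."""
    status = HTTPStatus(code)  # raises ValueError for codes the enum doesn't know
    return f"{status.value} {status.phrase} ({STATUS_CLASSES[code // 100]})"


for code in (200, 301, 302, 404, 410, 500, 503):
    print(describe_status(code))
```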

HTTP status codes play a useful role in SEO: every web page you want indexed should return a 200 OK response.

When you change or delete a page URL, requests for the old URL return 404 errors. In this situation, redirect the old URL to a related page with a 301 (permanent) or 302 (temporary) redirect.

On WordPress, the Redirection plugin can add 301/302 redirects for 404 error pages.

What is a broken link (404 error)?

A broken link, also called a dead link, is a link to a web page that no longer exists. Its HTTP status code is 404 Not Found, which means the resource could not be found on the server: the URL may be wrong or misspelled, or the requested page may have been deleted.

If you want to find broken links on your web pages easily, there are a number of broken link checker tools available:

- Google Search Console, formerly Google Webmaster Tools (error pages)

- Sitechecker

- InterroBot

- Screaming Frog

- Dead Link Checker

- Xenu’s Link Sleuth

- Ahrefs Broken Link Checker etc.
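For a quick scripted check alongside these tools, this sketch sends a HEAD request to each URL with urllib.request, which follows redirects, and reports any link whose final status is not 2xx or that cannot be reached at all. The function names and the User-Agent string are hypothetical; the status_of parameter lets you swap in another fetcher, and because some servers reject HEAD requests, a production checker would retry those with GET.

```python
import urllib.error
import urllib.request
from typing import Callable, Optional


def link_status(url: str, timeout: float = 10.0) -> Optional[int]:
    """Final HTTP status for url after redirects, or None if it can't be reached."""
    request = urllib.request.Request(
        url, method="HEAD", headers={"User-Agent": "example-link-checker/0.1"}
    )
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status  # urlopen has already followed any redirects
    except urllib.error.HTTPError as err:
        return err.code  # 4xx and 5xx responses are raised as HTTPError
    except OSError:
        return None  # DNS failure, refused connection, timeout, ...


def broken_links(urls: list[str],
                 status_of: Callable[[str], Optional[int]] = link_status):
    """Return (url, status) for every link that doesn't end in a 2xx response."""
    broken = []
    for url in urls:
        status = status_of(url)
        if status is None or not 200 <= status < 300:
            broken.append((url, status))
    return broken
```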

WordPress automatically creates 301 redirects when a post's URL or slug changes: changing a post's slug makes WordPress redirect the old URL to the new one. This applies to the default post type, post.

Google prefers short URLs, so SEO specialists often shorten long URLs for SEO purposes. But if you built backlinks to the old URL, those links would break.

The old URL is already indexed in search engines, so after the change it would return a 404 error. To prevent this, WordPress automatically 301-redirects old URLs to new ones, which is one reason WordPress is considered SEO-friendly.

Because WordPress remembers the old URL and 301-redirects it to the new one, your links aren't broken and your backlinks keep working.

This is a useful feature, but it can be frustrating because there is no option to manage it in the WordPress dashboard. The only way to remove these old entries is directly in your WordPress database.

Why is it important to redirect URLs for SEO?

The role of a 301 redirect in SEO is to preserve the rankings you have built up and to pass page authority from the old URL to the new one. A 301 redirect passes as much value as possible from the old URL to the new URL.

If you don't add a 301 redirect, the old URL may be treated as a 404 or soft 404 error page, and search engines won't pass its authority and traffic on to the new URL.

Where to implement the redirections?

301 redirects are a developer's task, so ask your developers to set them up. In WordPress, you can add them with plugins or in server configuration files such as .htaccess. Avoid redirect chains, where one redirect leads to another: they frustrate users as well as bots. If a chain loops back on itself, the browser keeps redirecting and the page never opens.
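Redirect chains can be removed by pointing every old URL straight at its final destination. This sketch takes a hypothetical mapping of old paths to new paths, follows each chain to its end, and raises an error if it finds a loop, the case where the page never opens. flatten_redirects is a hypothetical name; its output could then be written out as 301 rules in your server configuration or redirect plugin.

```python
def flatten_redirects(redirects: dict[str, str]) -> dict[str, str]:
    """Point every old URL straight at its final destination; raise on loops."""
    flat = {}
    for start in redirects:
        seen = [start]
        target = redirects[start]
        while target in redirects:  # the target itself redirects again
            if target in seen:
                raise ValueError("redirect loop: " + " -> ".join(seen + [target]))
            seen.append(target)
            target = redirects[target]
        flat[start] = target
    return flat


print(flatten_redirects({"/old-post": "/2019/post", "/2019/post": "/post"}))
# {'/old-post': '/post', '/2019/post': '/post'}
```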

Redirects themselves don't hurt SEO. However, poor execution can cause a wide range of problems, from lost page positions to lost traffic from Google searches.

Do you need to turn off or remove WordPress's automatic redirect from an old URL to a new one when a post's URL or slug changes?

Know More About: How To Fix WordPress Old URL Redirecting to New URL?

13. HTTPS Security & Web Application Firewall

Hypertext Transfer Protocol Secure (HTTPS) is a secure and widely used communication protocol for the World Wide Web (WWW). It is the secure version of HTTP, the protocol that transfers hypertext and hypermedia information across the web.

The communication is encrypted using Transport Layer Security (TLS), formerly called Secure Sockets Layer (SSL), which is why HTTPS is also referred to as HTTP over TLS or HTTP over SSL. HTTPS is used with all current versions of HTTP, including HTTP/2 and the newer HTTP/3.

Google announced in 2014 that HTTPS would be used as a positive ranking signal, so HTTPS websites can rank higher in Google search results. HTTPS encrypts users' page requests as well as the pages returned by the web server.

Google prefers HTTPS sites because they are more secure, tend to be faster, and increase visitors' trust, so they can rank higher in the SERPs than non-secure websites.

X-Security headers are HTTP headers sent as part of requests and responses. They define the operating parameters of an HTTP transaction and pass additional information between the client (browser) and the web server. They are also simply called HTTP headers.

You can use .htaccess techniques to add these headers and increase your website's security. Security headers help protect against cross-site scripting (XSS) and clickjacking (UI redress) attacks, and they reduce MIME-type security risks.
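To check which of these headers a server already sends, a minimal sketch can compare a response's headers with a baseline. The header names below are standard; the values and the function name missing_security_headers are assumptions to adapt to your site. On Apache with mod_headers, each missing header can then be added in .htaccess with a line such as Header always set X-Content-Type-Options "nosniff".

```python
# Hypothetical baseline: standard header names, example values.
RECOMMENDED_HEADERS = {
    "Strict-Transport-Security": "max-age=31536000; includeSubDomains",
    "X-Content-Type-Options": "nosniff",  # reduces MIME-type sniffing risks
    "X-Frame-Options": "SAMEORIGIN",  # helps against clickjacking
    "Content-Security-Policy": "default-src 'self'",  # helps against XSS
    "Referrer-Policy": "strict-origin-when-cross-origin",
}


def missing_security_headers(response_headers: dict[str, str]) -> list[str]:
    """Return recommended header names absent from a response (case-insensitive)."""
    present = {name.lower() for name in response_headers}
    return [name for name in RECOMMENDED_HEADERS if name.lower() not in present]


print(missing_security_headers({"x-content-type-options": "nosniff",
                                "X-Frame-Options": "DENY"}))
# ['Strict-Transport-Security', 'Content-Security-Policy', 'Referrer-Policy']
```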

What is the .htaccess file?

.htaccess is a configuration file used by web servers such as the Apache HTTP Server. It can override the server's configuration settings on a per-directory basis.

It can also add functionality and features to Apache. The file is commonly used for password protection, redirects, hotlink protection, blocking bad IPs and bots, content protection, and protection from clickjacking (UI redress) and cross-site scripting (XSS) attacks, among many other things.

14. Website Performance Optimization Via Compression & Caching

After completing the project and before deploying it to production, developers need to remove unnecessary code and minify and compress what remains. Leveraging browser caching also helps your website or blog load faster in all browsers, which improves conversion rates.

1. Reduced page load times keep visitors on the website longer and improve conversion rates.

2. Improved optimization scores in Pingdom, GTmetrix, YSlow, Google's PageSpeed Insights, etc.

3. Improved user experience via Page Caching and Browser Caching.

4. Bandwidth savings via Minify and HTTP compression of HTML, CSS, JavaScript, and RSS feeds.

5. Improved server response times of the website.
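The bandwidth savings from HTTP compression are easy to see with Python's gzip module, which produces the same gzip format that servers send with Content-Encoding: gzip. The CSS below is a made-up, highly repetitive sample, so real files will usually compress less; compression_savings is a hypothetical helper.

```python
import gzip


def compression_savings(text: str, level: int = 6) -> tuple[int, int]:
    """Return (original_bytes, gzip_bytes) for a text asset such as HTML or CSS."""
    raw = text.encode("utf-8")
    return len(raw), len(gzip.compress(raw, compresslevel=level))


css = ".card { margin: 0 auto; padding: 16px; }\n" * 200
original, compressed = compression_savings(css)
print(f"{original} bytes -> {compressed} bytes")
```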

With performance optimization, users stay longer on the website, which helps it perform better in Google searches.

15. Technical Aspects (Indexation, Crawling errors & Issues, Site Errors, and URL Errors)

Crawl errors happen when a web crawler tries to reach a page on your site but fails.

Make sure that every link on your site leads to an actual page. It may go through a 301 permanent redirect, but the page at the end of that link should always return a 200 OK server response.

Google separates crawl errors into two groups:

1. Site errors:

Site errors mean your whole site can't be crawled because of technical SEO issues. Site errors can have many causes; these are the most common:

» DNS errors:

This means a web crawler can't communicate with your server (for example, because the DNS lookup for your domain fails), so your site can't be visited. This is typically a temporary issue.

Google will come back to your site later and crawl it anyway. You can check error notifications in the crawl errors section of Google Search Console.

» Server errors:

These also show up in the crawl errors section of Google Search Console. A server error means the bot couldn't access your website and may have received a 500 Internal Server Error or 503 Service Unavailable response.

This usually means your website has server-side code errors or DNS issues, or the site is under maintenance.

» Robots failure:

Before crawling your site, Googlebot and other web crawlers first try to fetch your robots.txt file. If Googlebot can't reach the robots.txt file, or the file disallows all bots, Googlebot will put off crawling until the robots.txt file can be read and allows user agents to crawl the website.

Know More About:

What is Robots.txt File? What are the Different types of bots or Web Crawlers?

Robots.txt File vs Robots meta tag vs X-Robots-Tag

2. URL Errors:

URL errors each relate to one specific URL, so they are easier to track and fix. They include crawl errors such as 404 Not Found and soft 404 errors: when a page is not found on the server, bots and browsers get a 404 Not Found error. 404 errors can drop your rankings and increase the bounce rate.

» How to avoid the 404 Errors on the website:

Find similar content on another page and 301 (permanently) redirect the missing URL to it.

» URL Related SEO Errors/Mistakes:

Website URL structure is one of the important on-page SEO factors. A poor URL or permalink structure will not perform well in Google.

URL-related SEO mistakes include a lack of keywords in the URL, an irrelevant format, and URLs made only of numbers. Such URLs are not SEO-friendly and create SEO problems, whereas SEO-friendly URLs rank better in Google search results.

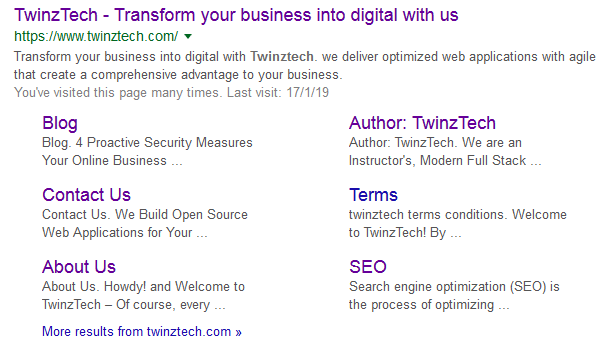

16. Google Site Search

Google Site Search brings the same search technology that powers Google.com to your site, delivering relevant results quickly.

Use Google's site: operator followed by the site's domain (for example, site:example.com) to limit a search to results from that single website.

With a site: search, we can check which pages of a website are indexed in Google. If the site doesn't appear in a site: search, it likely has crawl or URL issues.
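A site: query can also be opened directly as a search URL. This small sketch builds one with urllib.parse.urlencode; site_search_url is a hypothetical helper, and adding a path (for example /blog/) narrows the check to one section of the site.

```python
from urllib.parse import urlencode


def site_search_url(domain: str, path: str = "") -> str:
    """Google search URL for a site: query, optionally limited to one section."""
    return "https://www.google.com/search?" + urlencode({"q": f"site:{domain}{path}"})


print(site_search_url("example.com"))
# https://www.google.com/search?q=site%3Aexample.com
```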

What to check and set up for site indexation

1. Submit the site to Google Search Console (formerly Google Webmaster Tools) and set up Google Analytics.

2. Check robots meta tags, the robots.txt file, and X-Robots-Tag headers (remove noindex and nofollow if present).

3. Create XML sitemaps and submit them to Google Search Console.

4. Run a website SEO audit and check the status of crawl errors and issues.

5. Fetch and render the pages in the URL Inspection tool and check their index status.

6. Fix all errors and issues found in the SEO audit.

In this way, we can check the index status of the websites.

Conclusion

The SEO practices above will save you a lot of time when promoting websites. These are the best SEO practices for web developers and search marketers. If you need a site that ranks well in search engines such as Google, Bing, and Yahoo, connect with us.
