
JavaScript SEO: Server Side Rendering and Client Side Rendering

Google Search keeps improving its ability to render JavaScript pages and links at scale.


1. What is JavaScript SEO?

JavaScript SEO is the practice of ensuring that content on a web page that is generated by JavaScript is effectively rendered, indexed, and ultimately ranked in the search results of Google and other search engines.

This is particularly relevant because of the growing popularity of client-side rendering and of sites built on JavaScript frameworks.

2. The Ultimate Guide to JavaScript SEO

JavaScript is a popular topic nowadays. More and more websites use JavaScript and its frameworks and libraries, such as Angular.js, React.js, Backbone.js, Vue.js, Ember.js, Aurelia, and Polymer.

A JavaScript page that Google crawls can be rendered in one of two ways:

a. Client-Side Rendering (CSR):

It is the more recent rendering strategy, and it depends mainly on JavaScript executed on the client side (in the browser) using a JavaScript framework. The client first requests the source code, which contains almost no indexable HTML. A second request is then made for the .js file, which holds the majority of the HTML as JavaScript strings.

b. Server-Side Rendering (SSR):

It is the traditional rendering strategy: the majority of your web page’s assets are housed on the server. When the page is requested, the HTML is delivered to the browser and rendered, the JS and CSS are downloaded, and the final render appears to the client, bot, or crawler.
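The difference between the two strategies can be illustrated with a small sketch. It is only an approximation of what a crawler sees before any JavaScript runs: the two HTML snippets, the `IndexableTextParser` class, and the `indexable_text` helper are hypothetical names made up for this example, not part of any search engine tooling.

```python
from html.parser import HTMLParser


class IndexableTextParser(HTMLParser):
    """Collects text found outside <script> and <style> elements."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0  # > 0 while inside <script> or <style>
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if self._skip_depth == 0 and text:
            self.chunks.append(text)


def indexable_text(html):
    """Return the text available in the raw HTML, without executing any JS."""
    parser = IndexableTextParser()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


# Hypothetical CSR response: an empty shell plus a script bundle.
CSR_SHELL = """<html><head><title>Shop</title></head>
<body><div id="root"></div><script src="/bundle.js"></script></body></html>"""

# Hypothetical SSR response: the same page with content already in the HTML.
SSR_PAGE = """<html><head><title>Shop</title></head>
<body><div id="root"><h1>Red running shoes</h1><p>In stock.</p></div>
<script src="/bundle.js"></script></body></html>"""

print(repr(indexable_text(CSR_SHELL)))
print(repr(indexable_text(SSR_PAGE)))
```

In this sketch the CSR shell yields only the page title, while the SSR page also yields the heading and paragraph text, which is the gap the rest of this article is concerned with.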

3. What is Prerendering?

If you are using a Single Page Application (SPA) for a site that is not behind a login, SEO is a critical concern. Google suggests relying on its built-in ability to interpret JavaScript applications, but our recommendation is not to trust Google on this. In our experience that ability is often still insufficient, and prerendering is frequently still needed.

JavaScript is becoming the dominant language for building web applications. Showing all of your content matters because page ranking takes into account not only the relevance and quality of the content but also whether the content becomes visible within a reasonable amount of time.

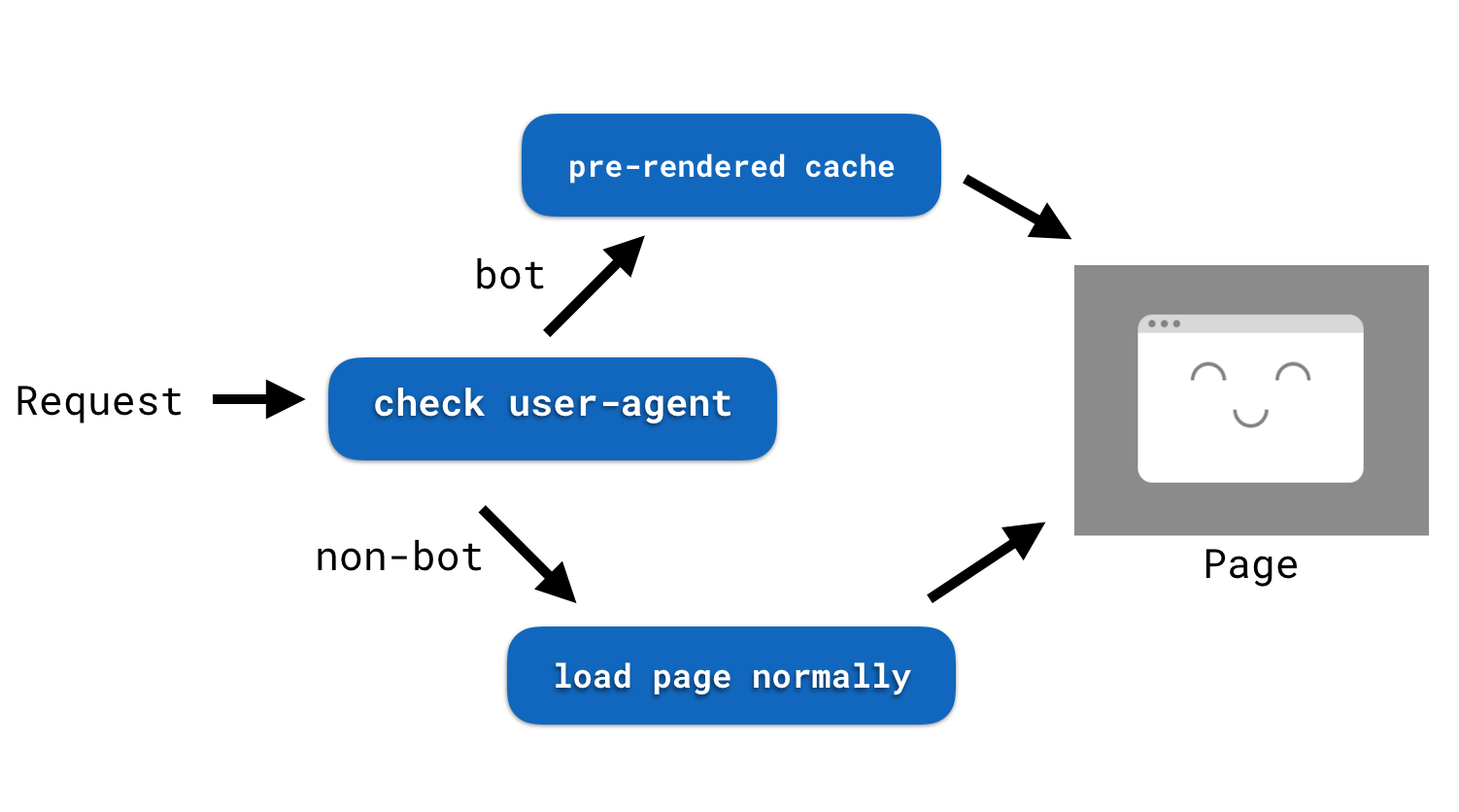

Prerendering is a technique that preloads all elements on the page in anticipation of a web crawler viewing it. A prerender service intercepts a page request to check whether the user-agent (client) viewing your website is a bot or spider. If it is a bot, the prerender middleware sends a cached version of your website in which all JavaScript, images, and so on are rendered statically.

If the user-agent (client) is not a bot, everything loads as normal. Prerendering is used only to optimize the experience for bots.

Source: Netlify

Social networks such as Facebook, Twitter, and LinkedIn also use bots when they feature links to your site. For these bots, the Open Graph information from the site’s metadata is loaded rather than a pre-cached version from prerendering.
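The decision a prerender middleware makes can be sketched roughly as follows. This is a simplified illustration, not the implementation of any particular prerender service: the `BOT_TOKENS` list, the `is_bot` and `choose_response` functions, and the in-memory `snapshot_cache` are all assumptions made for the example, and real services use much longer bot lists.

```python
# Hypothetical, deliberately short list of substrings found in crawler user-agents.
BOT_TOKENS = ("googlebot", "bingbot", "yandex", "duckduckbot", "baiduspider")


def is_bot(user_agent):
    """Return True if the user-agent string looks like a known crawler."""
    ua = (user_agent or "").lower()
    return any(token in ua for token in BOT_TOKENS)


def choose_response(user_agent, path, snapshot_cache):
    """Decide what to serve for a request.

    Returns ("prerendered", html) when a bot asks for a path that has a
    cached static snapshot, and ("app", None) otherwise, meaning the normal
    JavaScript application should be served.
    """
    if is_bot(user_agent) and path in snapshot_cache:
        return "prerendered", snapshot_cache[path]
    return "app", None


# Hypothetical cache of prerendered pages, keyed by URL path.
snapshot_cache = {"/": "<html><body><h1>Home</h1></body></html>"}

googlebot_ua = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
browser_ua = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/120.0 Safari/537.36"

print(choose_response(googlebot_ua, "/", snapshot_cache)[0])
print(choose_response(browser_ua, "/", snapshot_cache)[0])
```

Falling back to the normal application when no snapshot exists keeps the site reachable for bots even if the cache is cold, at the cost of the bot seeing the unrendered page.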

The Main Differences between Server-Side Rendering (SSR) and Client-Side Rendering (CSR)

Server-side rendering can be somewhat faster on the initial request, mainly because it does not require as many round trips to the server. It does not end there, however: performance also depends on some additional factors, all of which can result in drastically different experiences:

1. The internet speed of the client making the request

2. How many active users are accessing the site at a given time

3. The physical location of the server

4. How well optimized the pages are for speed

Client-side rendering, on the other hand, is slower on the initial request because it makes multiple round trips to the server. Once these requests are complete, however, client-side rendering offers a very fast experience through the JS framework.

The web has moved from plain HTML – as an SEO you can embrace that. Learn from JS devs & share SEO knowledge with them. JS's not going away.

— 🍌 John 🍌 (@JohnMu) August 8, 2017

4. How does Google handle JavaScript rendering and indexing?

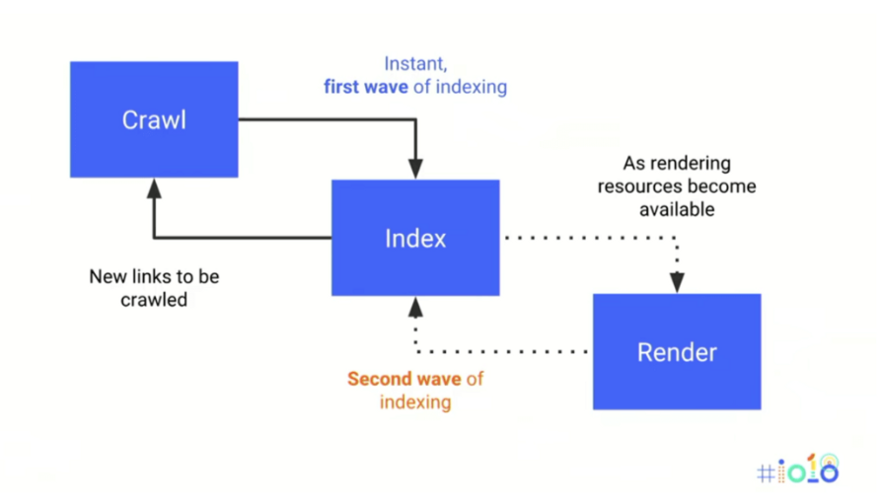

Google recently disclosed its current two-wave process for JS rendering and indexing at Google I/O.

In the first wave, Google crawls and indexes the HTML and CSS almost instantly, adds any links present to the crawl queue, and records the page’s HTTP response code.

In the second wave, Google comes back to render and index JavaScript-generated content, which can happen anywhere from a few hours to over a week later.

Generally, Google’s rendering delay is not an issue with SSR because the content is all in the source code and is indexed during the first wave. With CSR, where the indexable content is only revealed at render time, this sizable delay means the content may not be indexed or show up in search results for days or even weeks.

5. How JavaScript impacts SEO

There are numerous ways in which JavaScript impacts SEO, but Bartosz covered four of the main areas: indexing, crawl budget, ranking, and mobile-first.

The Chaotic JavaScript Landscape with Bartosz Goralewicz & Jon Myers | DeepCrawl Marketing Webinar

6. The Complexity of JavaScript Crawling and Rendering

The JavaScript crawling and rendering process is much more complicated than HTML crawling. Parsing, compiling, rendering, and executing JS files is very time-consuming. For JavaScript-rich sites, Google has to wait until all of these steps are done before it can index the content.

Crawling and rendering are not the only things that are slower. The same applies to discovering new links: with JavaScript-rich sites, Google usually cannot find new URLs until a page has been rendered.

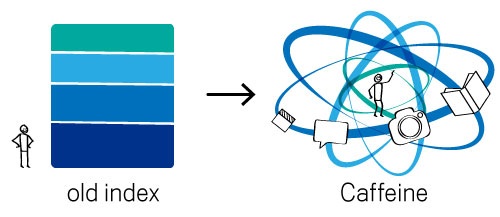

Caffeine was a new web indexing system introduced by Google. It enabled Google to crawl, render, and store information more efficiently.

The entire process is extremely fast.

1. Google converts PDF, DOC, XLS, and other similar document types into HTML for indexing.

2. Google will crawl and follow links within an iframe and pass value through them.

3. Google can render and index JavaScript (JS) pages and JavaScript (JS) links. JavaScript links are treated the same way as links in a plain HTML document.

4. Google doesn’t understand text embedded in an image (.jpg, .png, etc.).

5. Matt Cutts also confirmed that Googlebot can handle AJAX POST requests and crawl AJAX to retrieve Facebook comments. Crawling and rendering such content is tricky, but Googlebot can do it.

6. Google improved its Flash indexing capability and now also supports an AJAX crawling scheme.

@Web4Raw I say no

— Gary 鯨理/경리 Illyes (@methode) March 15, 2016

In the future, Google will use object detection to recognize and read the text embedded in an image.

Read More: https://cognitiveseo.com/blog/6511/will-google-read-rank-images-near-future/

7. How Google Crawls a Video:

1. Google can crawl the video and extract a thumbnail and preview. It can also extract some limited meaning from the audio and video of the file.

2. Google can extract the data from the page hosting the video, including the page text and metadata.

3. Google can utilize structured data (VideoObject) or video sitemap associated with the video.
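As an illustration of the structured data option, the sketch below builds a schema.org VideoObject as a JSON-LD script tag. The `video_object_jsonld` helper and all of the example values (names, dates, example.com URLs) are hypothetical; the property names come from the schema.org VideoObject type.

```python
import json


def video_object_jsonld(name, description, thumbnail_url, upload_date, content_url):
    """Build a JSON-LD <script> tag describing a video as a schema.org VideoObject."""
    data = {
        "@context": "https://schema.org",
        "@type": "VideoObject",
        "name": name,
        "description": description,
        "thumbnailUrl": thumbnail_url,
        "uploadDate": upload_date,  # ISO 8601 date
        "contentUrl": content_url,
    }
    return (
        '<script type="application/ld+json">\n'
        + json.dumps(data, indent=2)
        + "\n</script>"
    )


# Hypothetical example values.
tag = video_object_jsonld(
    name="JavaScript SEO basics",
    description="A short introduction to rendering and indexing.",
    thumbnail_url="https://example.com/thumbs/js-seo.jpg",
    upload_date="2019-03-01",
    content_url="https://example.com/videos/js-seo.mp4",
)
print(tag)
```

The resulting tag would be placed in the HTML of the page that hosts the video, ideally in the server-rendered source so that it is available without JavaScript execution.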

a. In the case of traditional HTML, everything is simple and direct:

1. Google’s web crawler downloads an HTML file.

2. Google’s web crawler downloads the CSS files.

3. Google’s web crawler extracts the links from the source code and can visit them at the same time.

4. Google’s web crawler sends all the downloaded resources to the search Index (Caffeine).

5. The search Index (Caffeine) indexes the web page.

b. Things get complicated when it comes to a JavaScript-based site:

1. Google’s web crawler downloads an HTML file.

2. Google’s web crawler downloads the CSS and JS files.

3. Later, Google’s web crawler has to use the Google Web Rendering Service (part of the Caffeine indexing system) to parse, compile, render, and execute the JS code.

4. Then the Web Rendering Service fetches data from external APIs, from the database, etc.

5. Finally, the Caffeine indexer can index the content.

6. Now Google can find new links and add them to Googlebot’s crawl queue.

Source: Elephate
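The point about link discovery in the JavaScript-based flow can be made concrete with a small sketch. It does not execute any JavaScript: `RENDERED_HTML` is a hand-written stand-in for what the page might look like after rendering, and `LinkExtractor` and `extract_links` are hypothetical helpers written for this example.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag it encounters."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def extract_links(html):
    """Return all link targets present in the given HTML, in document order."""
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    return parser.links


# Hypothetical raw response of a JavaScript-based site: no links in the source.
RAW_HTML = '<body><div id="app"></div><script src="/app.js"></script></body>'

# Hypothetical DOM after the JavaScript has run (written by hand for the example).
RENDERED_HTML = (
    '<body><div id="app">'
    '<a href="/products">Products</a><a href="/about">About</a>'
    "</div></body>"
)

print(extract_links(RAW_HTML))
print(extract_links(RENDERED_HTML))
```

In this example the raw source exposes no URLs at all, so nothing can be queued for crawling until the rendered version exists.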

8. Don’t block CSS, JavaScript, and image files

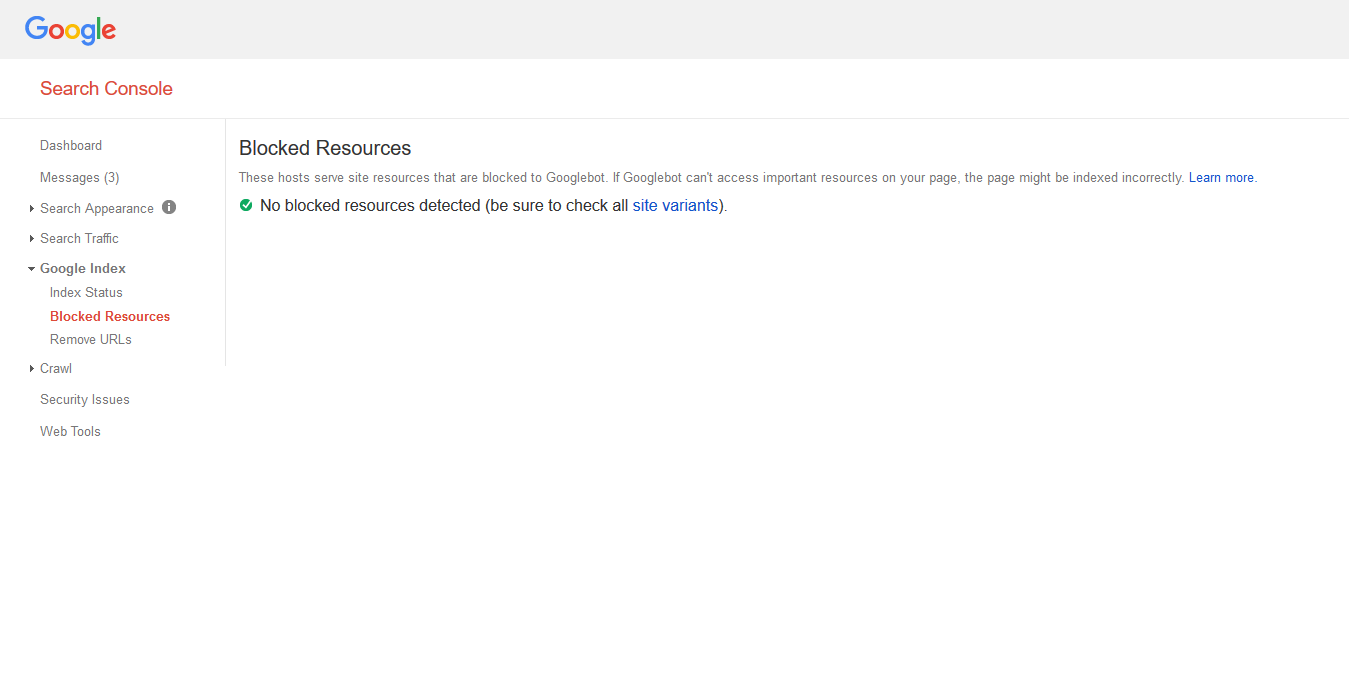

If you block CSS, JavaScript, and image files, you prevent Google and other search engines from checking whether your website works properly. If you block these files in your robots.txt file or with the X-Robots-Tag header, Google can’t render and understand your site, which might lower your rankings in search results.

SEO auditing tools like Moz, Semrush, and Ahrefs have also started rendering web pages and executing CSS, JavaScript, and image resources to produce their results. So don’t block CSS, JavaScript, and image files if you want your favorite SEO auditing tools to work.

Where can we check for blocked CSS, JavaScript, and image resources?

No site-wide view of blocked resources is available, but you can see a list of blocked resources for individual URLs using the URL Inspection tool.

Helpful Resources:

1. How to unblock your resources

2. New ways to perform old tasks & Currently unsupported features

3. Migrating from old Search Console to new Search Console

Don’t block Googlebot or other bots from accessing your CSS, JavaScript, and image files. These files allow Google and other search engines to properly fetch and render your site, so Google gets an idea of what it looks like. If Google doesn’t know what your site looks like, it can’t trust it, and that won’t help your search rankings.
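A quick local check for blocked resources can be sketched with Python’s standard `urllib.robotparser` module. The robots.txt content, the example.com URLs, and the `blocked_resources` helper below are hypothetical. Note that this parser uses simple prefix matching and may not interpret every rule exactly the way Googlebot does, so treat the result as a first approximation rather than a replacement for the URL Inspection tool.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that blocks script and stylesheet directories.
ROBOTS_TXT = """\
User-agent: *
Disallow: /assets/js/
Disallow: /assets/css/
"""


def blocked_resources(robots_txt, user_agent, urls):
    """Return the URLs that the given user-agent is not allowed to fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical resources referenced by a page.
resources = [
    "https://example.com/assets/js/app.js",
    "https://example.com/assets/css/site.css",
    "https://example.com/images/logo.png",
]

for url in blocked_resources(ROBOTS_TXT, "Googlebot", resources):
    print("blocked:", url)
```

With this robots.txt, the script and stylesheet URLs are reported as blocked while the image is not, which is exactly the situation the section above warns against.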

9. Does Google “count” links in JavaScript (JS)?

According to Mariya Moeva (a Search Quality Team member at Google), links found in JavaScript are treated by Google the same way as links in a plain HTML document.

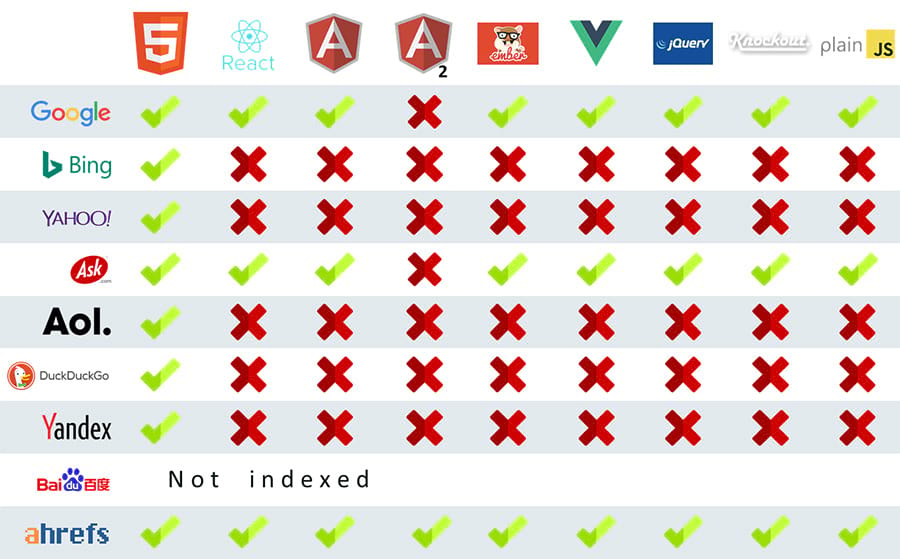

Except for Google and Ask, all the other major search engines are completely JavaScript-blind and won’t see your content if it isn’t in HTML format.

Bing, Yahoo, AOL, DuckDuckGo, and Yandex do not properly index JavaScript-generated content.

Here are the results:

Source: Ahrefs

Rendering works by converting the HTML documents on the server into usable data for the web browser (client). Google has officially stated that it crawls and renders JavaScript-powered websites in Google Search.

The experiment at http://jsseo.expert/ shows that the Google and Ask search engines crawl and render JavaScript pages and their links.

According to the jsseo.expert analysis, JavaScript-generated links weren’t crawled when the JavaScript was loaded remotely. The jsseo.expert analysis was run by Bartosz Góralewicz, CEO at Elephate, to check whether Google can handle sites built with common JavaScript frameworks.

10. Do SEO auditing tools render JavaScript (JS) the same way as Google?

No. Google does not give out much information about the way it handles and renders JavaScript (JS). Google can handle and render the different JavaScript (JS) frameworks.

Does Ahrefs execute JavaScript (JS) on all web pages?

Yes, they render JavaScript (JS) on all web pages. The Ahrefs JS crawler renders all JS pages and JS links. Ahrefs can crawl about 6 billion web pages per day, execute JS on about 30 million of those pages, and find 250 million JS links per day.

Conclusion:

Google and other web search engines will keep improving their ability to render JS pages and links at scale. Clearly, the use of JavaScript to enhance interactivity and user experience, and the rendering of JavaScript pages, is only expanding.

All things considered, it’s up to us as SEOs to get better at discussing this with our developers when starting new projects.

In any case, this will no doubt reveal new obstacles to overcome as development techniques evolve. The ideas covered in this article are intended to give you a high-level view of JavaScript SEO and the implications that come with it.
